
My homelab setup #

Recently, I have been obsessed with homelabs. As "Computers are bad" puts it, a homelab is a bit like the residential version of an "on-premises cloud", and it is also the kind of work no one pays you to do and that feeds only a bit of self-pride. My experience has been nothing but painful (see SaaS: Suffering as a Service), although it has also been a way to cope with the dread of weekends in Palo Alto without a car, and it has taught me tons about networking and hardware optimization.

I mainly need on-premises compute to:

- Have a real GPU to test ML pipelines.

- Run small processes without having to Slurm-it ©.

- Store all my data without relying on the cluster storage or abusing my already old laptop.

- More importantly, not rely so much on cloud services that abuse our data and charge us a ton of money!

The Hardware #

The whole thing is set up at my office desk, not at home. The reason is Stanford's very fast internet (DL: 856.4 Mbit/s; UL: 813.06 Mbit/s) compared to my actual home (DL: 53 Mbit/s; UL: 10.14 Mbit/s), although there are some cons that I will discuss later. The energy consumption of the whole rig is quite low: the ZimaBlade never exceeds 10 W, even under heavy load, and the Lenovo box never draws more than 40 W, even with GPU loads.
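
To put those numbers in perspective, here is a back-of-the-envelope sketch in Python. The 100 GB dataset size is a hypothetical example, the transfer times assume the measured download speeds are sustained with no protocol overhead, and the energy figure assumes both machines sit at their observed peaks around the clock, which is a pessimistic upper bound.

```python
# Back-of-the-envelope numbers for the office vs. home links and the rig's power draw.
# Speeds and wattages are the measurements quoted above; the dataset size is hypothetical.

def transfer_hours(size_gb: float, mbit_per_s: float) -> float:
    """Ideal transfer time in hours (decimal GB, no protocol overhead)."""
    bits = size_gb * 8e9
    return bits / (mbit_per_s * 1e6) / 3600


def yearly_kwh(watts: float) -> float:
    """Energy used in one year when drawing `watts` continuously."""
    return watts * 24 * 365 / 1000


dataset_gb = 100  # hypothetical dataset size, e.g. a chunk of ERA5
office = transfer_hours(dataset_gb, 856.4)  # office download speed
home = transfer_hours(dataset_gb, 53)       # home download speed
print(f"office: {office:.2f} h, home: {home:.2f} h")

# Worst case: ZimaBlade (10 W) + P360 (40 W) both at peak all year long.
print(f"worst-case energy: {yearly_kwh(10 + 40):.0f} kWh/year")
```

Under these assumptions, 100 GB takes roughly a quarter of an hour at the office versus a bit over four hours at home, and even the pessimistic energy estimate stays under about 440 kWh per year.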

I have the following elements for computing and data storage:

- A used Lenovo P360 Ultra (68 GB RAM, 3 TB NVMe, NVIDIA T1000 8 GB) I got from eBay.

- A ZimaBlade with 16 GB RAM.

- 2 × 18 TB Seagate IronWolf drives (renewed from Amazon, which is cheaper; my data is not that important) to serve as NAS disks.

- An unmanaged Netgear switch (1 Gigabit).

Compute #

Most of the computational load happens on the P360, which runs Debian and does a decent job running Python jobs and some services, like a PostgreSQL database and some Slack bots, among them one that automates HR for my lab. I sadly have to admit how much I love VS Code remote development; I stopped writing code in the raw terminal, although I am using vim to write this 😇.

I really love this machine: it is easy to open and upgrade, and it is very quiet. Sure, I cannot get the same performance as a 2U server, but it is good for now. I ssh into this machine daily and I couldn't run anything without it.

Storage #

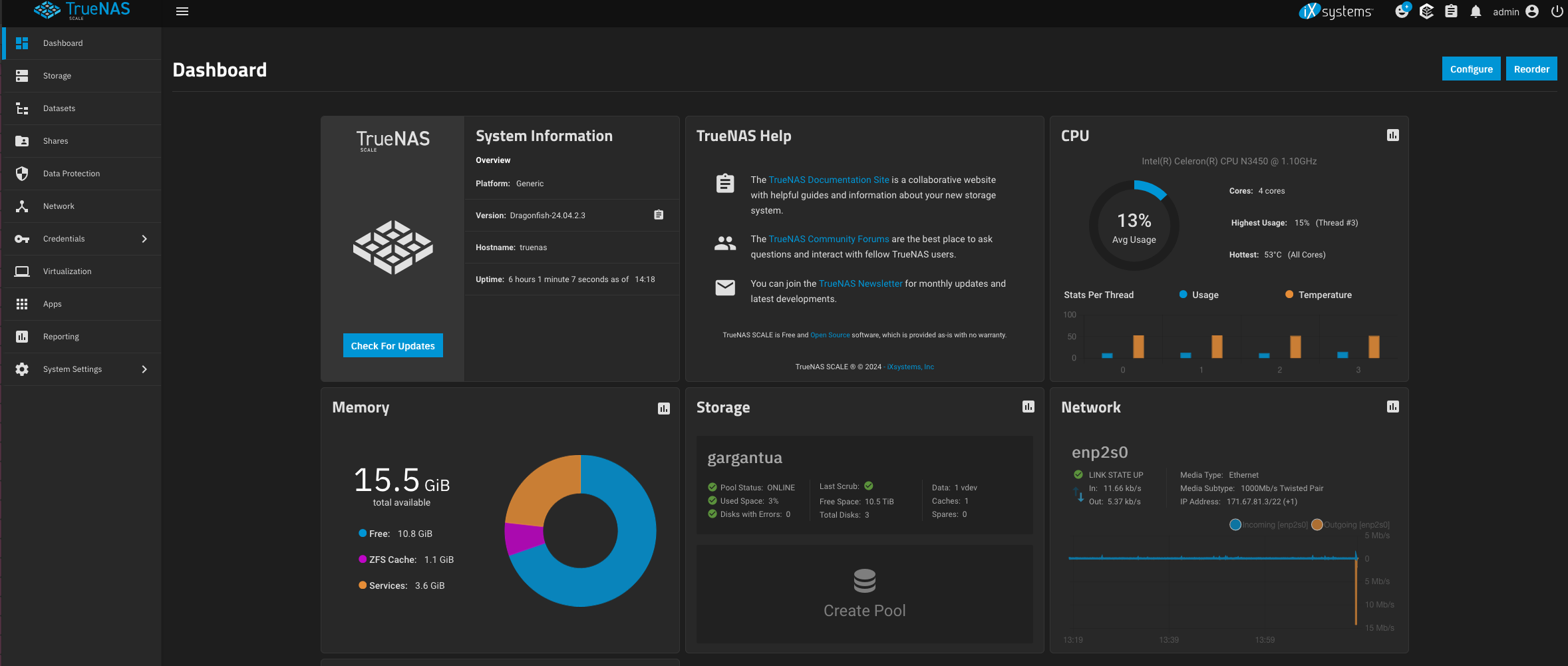

Storage lives on the ZimaBlade, which I run as a NAS using TrueNAS Scale. TrueNAS lets users run a ZFS filesystem across storage devices grouped into pools, on which we can also run containerized applications (basically Docker images). I have configured my main pool to prioritize write speed over read speed, since I keep data there that I download from the web and need to keep up to date (e.g. ERA5 datasets and some Landsat imagery). I run two nice applications on my Zima to administer my storage:

- Cloudflare Tunnel, to access localhost without any VPN or proxy.

- Syncthing, to sync data across my devices (mainly my phone, my personal laptop, and the storage).

- Nextcloud, but this failed miserably.

Have I mentioned ZFS is amazing? This file system allows me to mirror data across both of my HDDs: every time a file is copied to the data pool, it is written to both disks, which keeps the data available if one of them fails. This is not a foolproof backup solution, but it is something. Mirroring halves the usable storage to 18 TB, which, while less than the raw 36 TB, is still good for my purposes.
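
The capacity arithmetic for mirrors fits in a few lines of Python. This is a simplified model of my own, not a TrueNAS tool: each mirror vdev stores as much as its smallest disk, and it ignores ZFS metadata, reserved space, and the TB vs. TiB difference, all of which usually make the number reported by the system somewhat smaller.

```python
# Simplified capacity model for a ZFS pool built from mirror vdevs.
# Ignores metadata, reserved ("slop") space and TB-vs-TiB conversion.

def raw_tb(vdevs: list[list[float]]) -> float:
    """Total size of every disk in the pool."""
    return sum(sum(disks) for disks in vdevs)


def mirror_usable_tb(vdevs: list[list[float]]) -> float:
    """Each inner list is one mirror vdev; it can hold only as much as its smallest disk."""
    return sum(min(disks) for disks in vdevs)


pool = [[18.0, 18.0]]  # one two-way mirror of 18 TB drives, as in this setup
print(raw_tb(pool), mirror_usable_tb(pool))
```

For this pool it prints the raw 36 TB next to the 18 TB that is actually usable.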

One of the best features of ZFS and TrueNAS is the Adaptive Replacement Cache (ARC), a cache that lives in RAM and speeds up reads of frequently and recently accessed data. The ARC itself is limited to RAM, but ZFS (and therefore TrueNAS) also supports an L2ARC, a second-level cache on a faster device than the HDDs that holds data evicted from the ARC when it is full. It works like magic: I truly cannot tell the difference between opening files from my NAS and from my local device.
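
To make the ARC/L2ARC idea concrete, here is a toy two-level cache in Python. It is only an analogy under simplifying assumptions: both levels use plain LRU eviction, entries evicted from the RAM level spill into the second level, and a miss in both falls through to a hypothetical `read_from_disk` function standing in for the HDD pool. The real ZFS ARC balances recently used and frequently used lists, and the L2ARC is fed in the background, so this is not how ZFS actually implements it.

```python
from collections import OrderedDict


class TwoLevelCache:
    """Toy RAM cache with an overflow second level, loosely mimicking ARC + L2ARC.

    Both levels are plain LRU caches; this is an illustration, not ZFS's algorithm.
    """

    def __init__(self, ram_slots: int, l2_slots: int, read_from_disk):
        self.ram = OrderedDict()  # stands in for the ARC (RAM)
        self.l2 = OrderedDict()   # stands in for the L2ARC (fast device)
        self.ram_slots = ram_slots
        self.l2_slots = l2_slots
        self.read_from_disk = read_from_disk  # hypothetical slow path: the HDD pool
        self.hits = {"ram": 0, "l2": 0, "disk": 0}

    def _put_ram(self, key, value):
        self.ram[key] = value
        self.ram.move_to_end(key)
        if len(self.ram) > self.ram_slots:
            # The least recently used RAM entry spills over into the second level.
            old_key, old_value = self.ram.popitem(last=False)
            self.l2[old_key] = old_value
            if len(self.l2) > self.l2_slots:
                self.l2.popitem(last=False)  # drop the oldest L2 entry entirely

    def get(self, key):
        if key in self.ram:
            self.hits["ram"] += 1
            self.ram.move_to_end(key)
            return self.ram[key]
        if key in self.l2:
            self.hits["l2"] += 1
            value = self.l2.pop(key)  # promote back into RAM
            self._put_ram(key, value)
            return value
        self.hits["disk"] += 1
        value = self.read_from_disk(key)
        self._put_ram(key, value)
        return value


cache = TwoLevelCache(ram_slots=2, l2_slots=4, read_from_disk=lambda k: f"block-{k}")
for block in [1, 2, 3, 1, 1]:
    cache.get(block)
print(cache.hits)
```

Block 1 is pushed out of RAM by block 3, but the next read finds it in the second level instead of going back to disk, which is exactly the effect that makes the NAS feel local.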

In summary, I have two storage layers: fast and slow.

- 1 TB NVMe SSD inside the P360 and 1 TB NVMe in the ZimaBlade

- My TrueNAS pool: 18 TB of HDD in the ZimaBlade, with a 1 TB NVMe as L2ARC cache for ZFS

Data transfers between devices, including Sherlock (Stanford's cluster), are very fast! I connect to $OAK using sshfs and to my NAS using NFS. My personal data lives there too, but in a different dataset connected to Syncthing.

Network #

I have two Ethernet wall jacks, but I want to give wired connections to all my devices, including my laptop. To do this, I use an unmanaged switch that splits the first Ethernet connection between the P360, my laptop, and the ZimaBlade NAS. The second Ethernet connection goes directly to the P360, which has a second Ethernet card; this allows for fast data transfers between devices.

Stanford's network security is annoying. There is no way to use WiFi for IoT devices, which is understandable, but it also means that some devices cannot be connected to the network, and obviously you cannot run your own VPS on campus. Every device must also be registered using software that, while not sniffing packets, tracks your MAC address, which can be used to locate you and your device across campus and to aggregate internet traffic data (e.g. what is the most commonly visited webpage across MAC addresses?). During this process, I found that this software doesn't like MAC address randomization, which is frustrating; I simply don't want to be tracked, but the internet speed is so good that I stopped caring much.
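
I don't know how the registration software works internally, but randomized addresses are easy to recognize. Operating systems generate private MACs as locally administered unicast addresses: the 0x02 bit of the first octet is set and the 0x01 (multicast) bit is cleared, while factory-assigned addresses normally leave the 0x02 bit clear. The sketch below shows both the check and the generation; the example addresses are made up for illustration.

```python
import secrets


def is_locally_administered(mac: str) -> bool:
    """True if the 'locally administered' bit (0x02 of the first octet) is set."""
    first_octet = int(mac.replace("-", ":").split(":")[0], 16)
    return bool(first_octet & 0x02)


def random_private_mac() -> str:
    """Random unicast MAC with the locally administered bit set, like OS randomization."""
    octets = bytearray(secrets.token_bytes(6))
    octets[0] = (octets[0] | 0x02) & 0xFE  # set locally administered, clear multicast
    return ":".join(f"{b:02x}" for b in octets)


print(is_locally_administered("3c:22:fb:12:34:56"))  # made-up factory-style address
print(is_locally_administered("da:a1:19:00:00:01"))  # made-up randomized-style address
print(random_private_mac())
```

A fresh random address per network (or per day) is what breaks the one-MAC-per-registered-device model, and the set bit makes such addresses trivially identifiable.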

Having ended this rant, there is a lot of cool stuff out there that I would still love to try. There are amazing IKEA racks (1) and (2), but also very cool resources to ask questions, starting with r/selfhosted and r/homelab.